To assess the reliability and validity of the objective structured clinical examination (OSCE) used in the postgraduate certification process of the Mexican Board of Rheumatology.

Method

Thirty-two (2013) and 38 (2014) Rheumatology trainees (RTs) took an OSCE of 12 and 15 stations, respectively, scored with a validated checklist, as well as a 300-item multiple-choice question (MCQ) examination. Beforehand, 3 certified rheumatologists took a pilot OSCE. A composite OSCE score was obtained for each participant, and its performance was examined.

Results

In 2013, the mean OSCE score was 7.1±0.6 and no RT received a failing score, while the mean MCQ score was 6.5±0.6 and 7 (21.9%) RTs received a failing (<6) score. In 2014, the mean OSCE score was 6.7±0.6, with 3 (7.9%) RTs receiving a failing score (2 of whom also failed the MCQ), while the mean MCQ score was 6.4±0.5 and 7 (18.4%) RTs received a failing score (2 of whom also failed the OSCE). A significant correlation between the MCQ and OSCE scores was observed in 2013 (r=0.44; P=.006). Certified rheumatologists performed better than RTs in both OSCEs.

Overall, 86% of RTs who passed the OSCE also passed the MCQ, compared with only 67% (P=.02) of those who failed the OSCE.

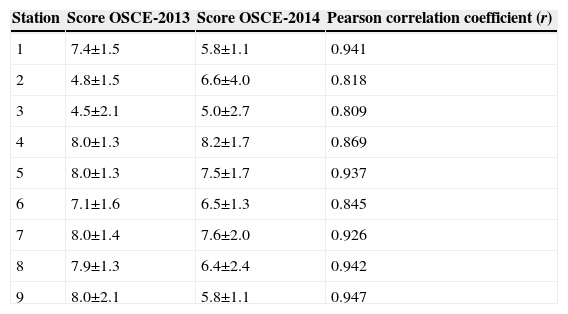

Nine stations were applied in both consecutive years. Their performance was similar in both certification processes, with correlation coefficients ranging from 0.81 to 0.95 (P≤.01).

Conclusion

The OSCE is a valid and reliable tool to assess Rheumatology clinical skills in RTs.

To determine the construct validity and reliability of an objective structured clinical examination (OSCE) in the assessment for national certification as a rheumatologist.

Method

In 2013 and 2014, an OSCE and a theory examination (TE) were administered to 32 and 38 residents applying for certification as rheumatologists, respectively. The OSCEs comprised 12 and 15 stations, scored with a validated checklist. Beforehand, 3 certified rheumatologists took a pilot test each year. The global OSCE score was calculated and its performance was evaluated.

Results

In 2013, the mean ± SD OSCE score was 7.1±0.6 and no applicant had a failing score (FS); the mean TE score was 6.5±0.6 and 7 applicants (21.9%) had an FS (<6). In 2014, the mean OSCE score was 6.7±0.6 and 3 applicants (7.9%) had an FS, 2 of whom failed the TE; the mean TE score was 6.4±0.5 and 7 applicants (18.4%) had an FS, 2 of whom failed the OSCE.

In 2013, the correlation between the OSCE and the TE was r=0.44 (P=.006). In both years, the certified rheumatologists scored higher on the OSCE than the residents. The TE pass rate was higher among those who passed the OSCE than among those who failed it: 86% vs. 67%, P=.02.

Nine stations were applied in both years, and their scores showed correlations of 0.81 to 0.95, P≤.01.

Conclusion

The OSCE is an adequate tool for assessing the clinical competencies of certification applicants.

The Boards of Medical Specialties are collegial bodies formed by prominent medical professionals who practice the same specialty and who run a certification and recertification process. The Mexican Board of Rheumatology, AC (CMR), was founded on February 10, 1975, and currently consists of 12 advisors. Since its inception, the CMR has certified 730 adult and 49 pediatric rheumatologists through an examination consisting of a theory part and a practical part. Until 2012, the practical evaluation was carried out on a real patient, as a single case.

The certification exam of any medical specialty is considered a high-stakes exam, so boards must do everything possible to ensure that their assessment tools are built on an appropriate technical framework and with educational professionalism.1 Examination of a real patient as a single case evaluates medical performance in real settings, verifying the physician's ability to obtain helpful information, make appropriate decisions, integrate previous knowledge and discuss views. However, it has poor reliability and reproducibility.2 The objective structured clinical examination (OSCE) is a highly objective assessment tool that allows a large number of skills to be examined, leading some authors to consider it the gold standard for assessing clinical competencies.3–6 Since 1975, the OSCE has been used in Canada, the United States, Australia and Europe to evaluate undergraduate clinical skills. In Mexico, it has been used since 1996 as a summative exam at the Faculty of Medicine of the National Autonomous University of Mexico (UNAM), and since 2002 as the professional practice examination phase of the undergraduate course.7 The OSCE has also shown good results in evaluating general physicians,8,9 surgeons, pediatricians,10 and rheumatologists,11 as well as musculoskeletal ultrasound skills.12

Since its inception, the CMR has recognized clinical competence as a crucial aspect of the training of any future rheumatologist. Two years ago, the CMR implemented an OSCE for applicants as part of its annual certification process. This paper describes the process and examines the construct validity and reliability of the OSCE.

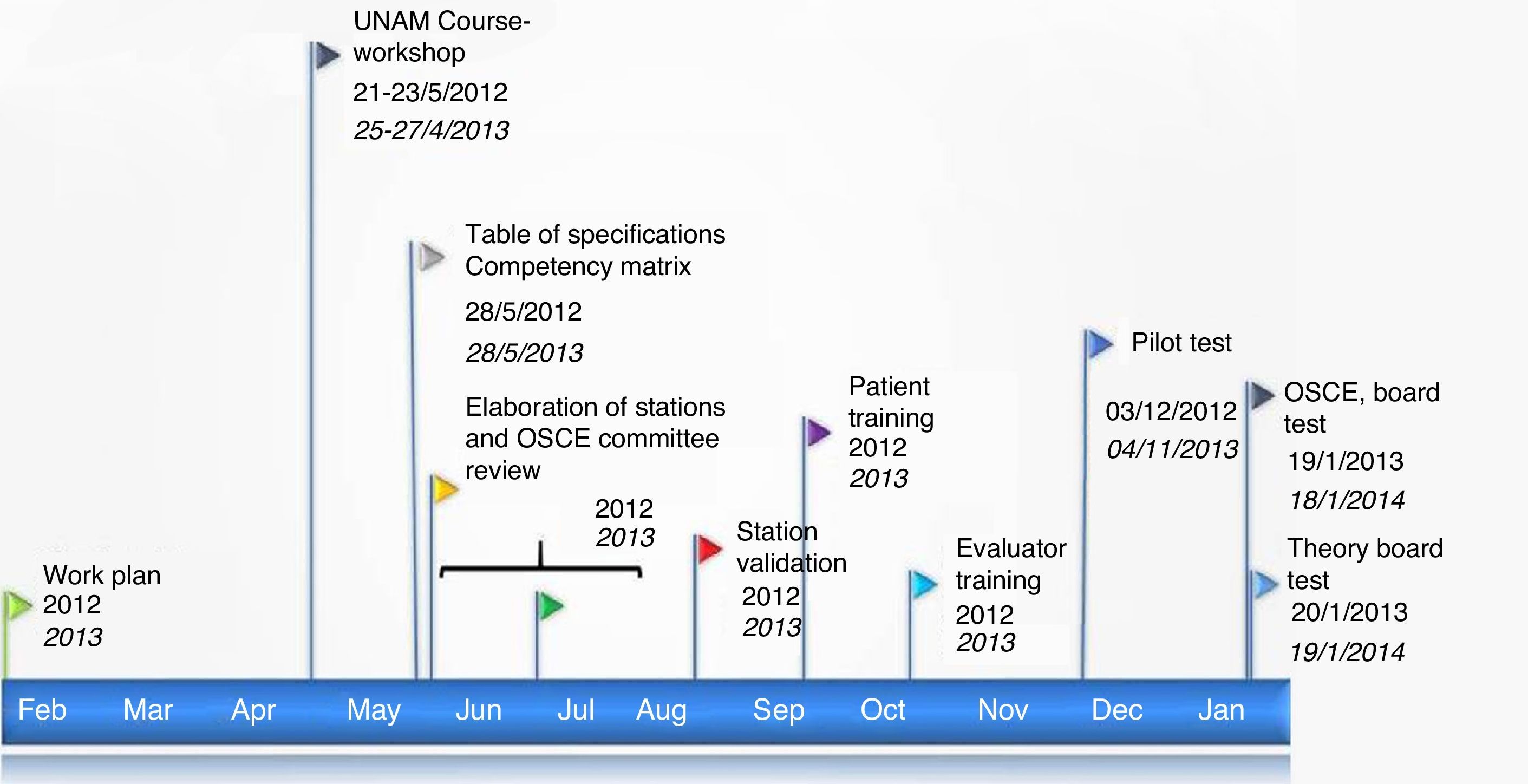

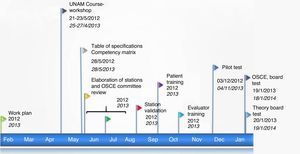

Materials and Methods

Planning the Objective Structured Clinical Examination (Fig. 1)

In February 2012, the CMR implemented a work plan to develop an OSCE, which was applied for the first time in January 2013, during the examination for certification as a rheumatologist. An OSCE committee of four board advisors was formed. On 21 May 2012, its members completed a Course-Workshop on Assessment of Competence using the Objective Structured Clinical Examination, held within the teacher training program of the Department of Medical Education of UNAM.

In late May, the CMR prepared a table of specifications and a skills matrix based on the academic content of the Rheumatology course13 and on the estimated prevalence of rheumatic diseases in our country.14 The final proposal included the design of 14 stations with well-defined requirements.7

Three committees were formed in parallel:

- Station Validation Committee. A 3-h session was held in which the committee's purpose was defined, the content of each station was presented and each checklist was validated (agreement ≥80%). Twelve of the 14 stations were validated.
- Patient Training Committee, which selected and trained 18 patients.
- Evaluator Training Committee. Seven advisors and 5 rheumatologists with over 10 years of experience were selected. All received instructions on the role and behavior of evaluators during the OSCE.

The pilot test was conducted in December 2012 in purpose-built facilities at the Faculty of Medicine, UNAM. A previously validated 12-station circuit was completed by three certified rheumatologists with less than 3 years of experience.

On 19 January 2013, the first OSCE was applied as the CMR's practical certification exam, and the theory test was applied on 20 January. The following year's CMR examination took place on 18 and 19 January 2014, in its theory and practical (OSCE) versions, respectively. Its design and preparation were similar to those described above.

Design of the Objective Structured Clinical Examination

The circuit for the 2013 assessment consisted of 12 evaluation stations and 4 rest stations. The 2014 circuit comprised 15 evaluation stations and 5 rest stations. Each station lasted 8 min. The conditions addressed were Sjögren's syndrome, low back pain, progressive systemic sclerosis, rheumatoid arthritis, inflammatory myopathy, gout, shoulder pain, systemic lupus erythematosus, antiphospholipid syndrome, seronegative spondyloarthropathies and osteoarthritis.

Population to Which the Objective Structured Clinical Examination and Theory Test Were Applied

The first OSCE was applied to 32 candidates and the second to 38. All candidates met the CMR requirements to sit the examination and had completed their rheumatology training at hospitals around the country recognized for that purpose.

Theory Test Board Exam

The theory examination consisted of 300 multiple-choice questions. Of these, 250 were grouped into clinical cases of 5 questions each (50 cases), and 50 were questions on different topics in immunology.

Score for Certification

The theory and practical tests were scored independently.

Each station was scored by adding the checklist item scores and projecting the sum onto a 0–10 scale. The overall OSCE score was then calculated as the arithmetic mean of the station scores, taking into account the value of each station.
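The scoring rule can be sketched as follows. This is a minimal illustration, not the CMR's actual software. The checklist items and point values are hypothetical, and the optional `weights` argument is one assumed reading of "taking into account the value of each station". Only the 0–10 projection and the ≥6 passing threshold come from the text.

```python
from typing import List, Optional

PASSING_SCORE = 6.0  # passing threshold stated in the text (>= 6 on the 0-10 scale)


def station_score(points: List[float], max_points: List[float]) -> float:
    """Sum the checklist points earned and project the sum onto a 0-10 scale."""
    if len(points) != len(max_points):
        raise ValueError("points and max_points must have the same length")
    return 10 * sum(points) / sum(max_points)


def overall_osce_score(scores: List[float], weights: Optional[List[float]] = None) -> float:
    """(Weighted) arithmetic mean of station scores; equal weights by default (assumption)."""
    if weights is None:
        weights = [1.0] * len(scores)
    return sum(s * w for s, w in zip(scores, weights)) / sum(weights)


if __name__ == "__main__":
    # Hypothetical checklists with 1 point per item.
    s1 = station_score([1, 1, 0, 1, 1], [1, 1, 1, 1, 1])  # 4 of 5 items -> 8.0
    s2 = station_score([1, 0, 0, 1], [1, 1, 1, 1])        # 2 of 4 items -> 5.0
    total = overall_osce_score([s1, s2])
    print(total, total >= PASSING_SCORE)  # 6.5 True
```

With equal weights, a candidate can fail individual stations (here 5.0) and still pass overall. Only the composite score is compared with the threshold.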

In 2013, no minimum OSCE score was required for certification, although the CMR considered a score ≥6 as passing.

In 2014, a score ≥6 was considered passing for the OSCE, the same threshold used for the theory test.

Statistical Analysis

Descriptive statistics were computed, and appropriate tests were used to assess the distribution of the variables.

Construct validity: the theory test score and the overall OSCE score were correlated using the Pearson correlation coefficient. The theory test scores were divided into quartiles, and the distribution of applicants with passing and non-passing OSCE scores across quartiles was determined. A Wilcoxon test was then performed. Finally, the score of each OSCE station was compared between applicants and certified rheumatologists using a two-tailed Student's t test.
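The Pearson coefficient used for the theory–OSCE correlation can be sketched in plain Python. The function names are illustrative, and any scores you pass in are hypothetical, not study data. The t statistic shown is the standard test of r = 0 with n − 2 degrees of freedom. In practice, `scipy.stats.pearsonr` returns both r and its p-value, and Python 3.10+ also offers `statistics.correlation`.

```python
import math
from typing import List


def pearson_r(x: List[float], y: List[float]) -> float:
    """Pearson product-moment correlation between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r: float, n: int) -> float:
    """t = r * sqrt(n - 2) / sqrt(1 - r^2), used to test H0: r = 0 with n - 2 df."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


if __name__ == "__main__":
    theory = [5.8, 6.1, 6.4, 6.9, 7.2]  # hypothetical theory scores
    osce = [6.5, 6.9, 7.0, 7.4, 7.3]    # hypothetical overall OSCE scores
    r = pearson_r(theory, osce)
    print(round(r, 3), round(t_statistic(r, len(theory)), 3))
```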

To detect potential redundancy among stations, the interstation correlation was calculated from each participant's total OSCE score and the score of each station, using Pearson correlation coefficients.
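One common way to summarize interstation correlation is the mean of the Pearson correlations between every pair of stations, each computed across participants. The sketch below assumes that definition. The text does not spell out the exact computation, and a station-to-total correlation is another possible reading. All names are illustrative.

```python
import math
from itertools import combinations
from typing import List, Tuple


def pearson_r(x: List[float], y: List[float]) -> float:
    """Pearson correlation between two equal-length samples."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def interstation_correlations(scores: List[List[float]]) -> Tuple[float, float, float]:
    """scores[p][s] is the score of participant p at station s.

    Returns (mean, min, max) of the Pearson r over all pairs of stations.
    """
    n_stations = len(scores[0])
    columns = [[row[s] for row in scores] for s in range(n_stations)]
    rs = [pearson_r(columns[i], columns[j])
          for i, j in combinations(range(n_stations), 2)]
    return sum(rs) / len(rs), min(rs), max(rs)
```

A low mean with a wide range, as reported later in the Results, is what one would expect if stations measure largely distinct skills.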

Analyses were performed using the SPSS 20 Statistical Package.

Results

Certification Exam Score

2013 (32 candidates): The overall OSCE score (mean±SD) was 7.1±0.6, and all applicants scored ≥6. The overall theory test score was 6.5±0.6, and 25 applicants (78.1%) scored ≥6.

2014 (38 candidates): The overall OSCE score was 6.7±0.6, and 35 candidates (92.1%) had a passing score (≥6). Of the 3 candidates with a non-passing OSCE score, 2 also had a non-passing theory score. The overall theory test score was 6.4±0.5, and 31 candidates (81.6%) had a passing score (≥6). Of the 7 candidates who failed the theory test, 2 also failed the OSCE. Finally, 30 candidates (78.9%) passed both tests.
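As a quick consistency check, the number of 2014 candidates who passed both tests follows from the counts reported above by inclusion–exclusion on the failures. The function name is illustrative.

```python
def passed_both(n_total: int, failed_osce: int, failed_theory: int, failed_both: int) -> int:
    """Candidates passing both tests: total minus those failing at least one."""
    failed_any = failed_osce + failed_theory - failed_both  # inclusion-exclusion
    return n_total - failed_any


n = 38
both = passed_both(n, failed_osce=3, failed_theory=7, failed_both=2)
print(both, round(100 * both / n, 1))  # 30 78.9
```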

Station scores (mean±SD) ranged from 4.5±2.1 to 8.8±2.0 in 2013 and from 5.0±2.7 to 8.2±1.7 in 2014. The percentage of applicants scoring ≥6 on a station ranged from 18.8% to 96.9% in 2013 and from 36.8% to 100% in 2014 (table available online).

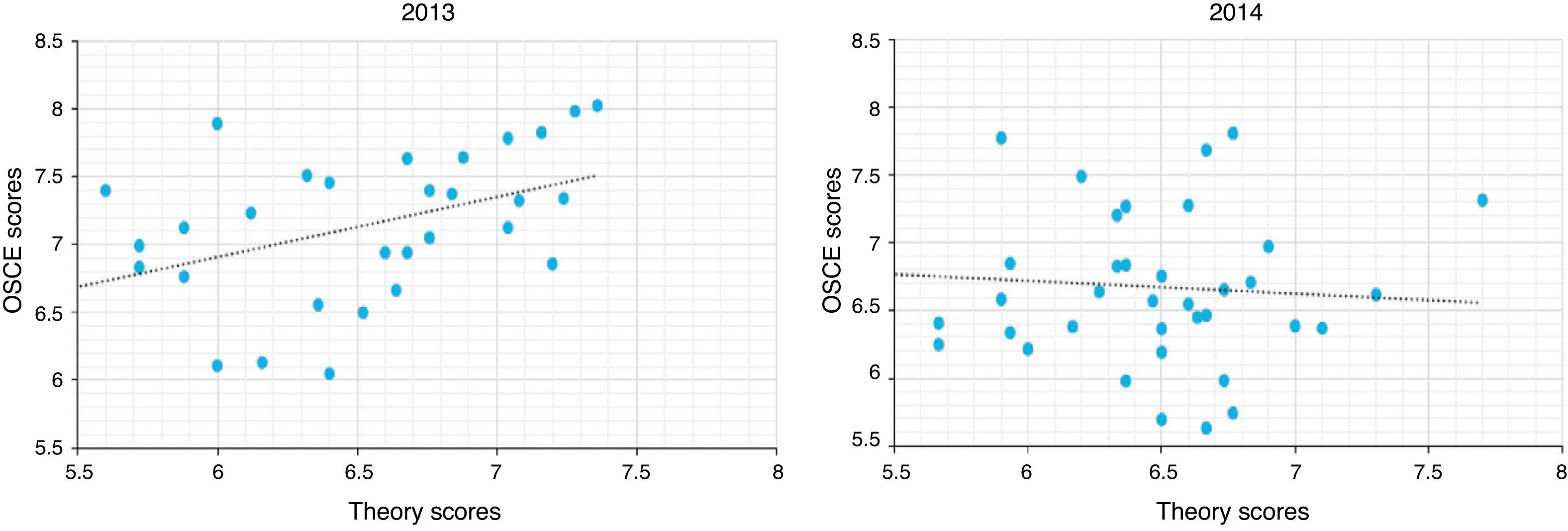

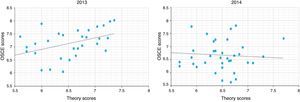

Validity

The overall OSCE score of each examinee was correlated with their theory examination score. In 2013, a moderate and significant correlation was found (r=0.436; P=0.006), whereas in 2014 the correlation was weak and not significant (r=0.203; P=NS) (Fig. 2).

The validity of the OSCE was also assessed through the experience construct, by comparing applicants' scores with those of board-certified rheumatologists with 2 and 3 years of experience. The latter scored significantly higher than the candidates in 8 of the 12 stations of the 2013 circuit and in 10 of the 15 stations of the 2014 circuit (table published online).
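The per-station comparison uses a two-sample Student's t test. A minimal sketch of the pooled-variance t statistic is shown below. The function name and example data are hypothetical. The two-tailed p-value comes from the t distribution with the returned degrees of freedom, for example via `scipy.stats.ttest_ind(a, b)`, which pools variances by default.

```python
import math
from statistics import mean, variance
from typing import List, Tuple


def student_t(a: List[float], b: List[float]) -> Tuple[float, int]:
    """Two-sample Student's t statistic with pooled variance; returns (t, df)."""
    na, nb = len(a), len(b)
    df = na + nb - 2
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / math.sqrt(pooled * (1 / na + 1 / nb))
    return t, df


if __name__ == "__main__":
    certified = [8.5, 9.0, 8.0]                # hypothetical station scores, 3 rheumatologists
    applicants = [6.0, 7.5, 5.5, 7.0, 6.5, 8.0]  # hypothetical applicant scores
    print(student_t(certified, applicants))
```

With only 3 certified rheumatologists, the pooled variance is dominated by the applicant group, so a station can show a large mean difference yet a modest t.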

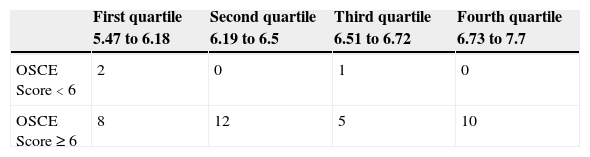

Agreement

Theory test results were divided into quartiles, and the number of applicants with passing and non-passing OSCE scores was determined in each quartile. This was done only for 2014, as no applicant failed the OSCE in 2013. The results are presented in Table 1. The theory test pass rate was compared between candidates who failed the OSCE and those who passed it: 67% vs 86%, P=0.02 (chi-square). Finally, a similar, non-significant trend was observed for theory test scores: the mean theory score of the 3 candidates who failed the OSCE was lower than that of the 35 candidates who passed it: 5.9±0.6 vs 6.5±0.45, P=0.2 (Wilcoxon test).
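The pass-rate comparison uses a chi-square test on a 2×2 table (OSCE pass/fail by theory pass/fail). Below is a minimal sketch of the uncorrected Pearson chi-square with its 1-degree-of-freedom p-value. The function name and example counts are hypothetical. It relies on the fact that a 1-df chi-square variable is the square of a standard normal, so P(X > χ²) = erfc(√(χ²/2)).

```python
import math
from typing import Tuple


def chi_square_2x2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Returns (chi2, p) with 1 degree of freedom.
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with 1 df
    return chi2, p


if __name__ == "__main__":
    # Hypothetical counts: rows = OSCE pass/fail, columns = theory pass/fail.
    print(chi_square_2x2(30, 10, 10, 30))
```

Note that `scipy.stats.chi2_contingency` applies Yates' continuity correction to 2×2 tables by default, and with expected counts below 5 (for example, a group of only 3 candidates), `scipy.stats.fisher_exact` is the usual alternative.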

Reliability-reproducibility

The group performance of the examinees in 2013 (n=32) and 2014 (n=38) was compared on the 9 stations repeated in both years. Missing data arising from the different numbers of applicants in the two years were imputed with the group mean. Correlations between scores on the two OSCEs were good, with r>0.8 and P≤0.01 (Table 2).

Table 2. Comparison of the Scores of the Stations Implemented in the Objective Structured Clinical Examination in 2013 and 2014 (P≤0.01).

| Station | OSCE 2013 score (mean±SD) | OSCE 2014 score (mean±SD) | Pearson correlation coefficient (r) |
|---|---|---|---|
| 1 | 7.4±1.5 | 5.8±1.1 | 0.941 |
| 2 | 4.8±1.5 | 6.6±4.0 | 0.818 |
| 3 | 4.5±2.1 | 5.0±2.7 | 0.809 |
| 4 | 8.0±1.3 | 8.2±1.7 | 0.869 |
| 5 | 8.0±1.3 | 7.5±1.7 | 0.937 |
| 6 | 7.1±1.6 | 6.5±1.3 | 0.845 |
| 7 | 8.0±1.4 | 7.6±2.0 | 0.926 |
| 8 | 7.9±1.3 | 6.4±2.4 | 0.942 |
| 9 | 8.0±2.1 | 5.8±1.1 | 0.947 |

Cronbach's alpha for the certified rheumatologists who took the pilot test in both years, considering the 9 repeated stations, was 0.83–0.92.
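Cronbach's alpha can be computed from a score matrix as α = k/(k − 1) · (1 − Σ item variances / variance of total scores). The sketch below assumes that stations are the items and examinees are the cases. The text does not state the exact layout used. The function name is illustrative.

```python
from statistics import variance
from typing import List


def cronbach_alpha(scores: List[List[float]]) -> float:
    """scores[p][i] is the score of examinee p on item (station) i.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)),
    using sample variances throughout.
    """
    k = len(scores[0])
    item_vars = [variance([row[i] for row in scores]) for i in range(k)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)
```

Alpha approaches 1 when the items rank examinees the same way. With very few examinees, as in a 3-person pilot, the estimate is unstable.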

Finally, the average interstation correlation (between the scores of different stations within each participant) was low in both years: r=0.17 (range −0.11 to 0.35) in 2013 and r=0.18 (range −0.20 to 0.57) in 2014.

Discussion

This paper describes the design, development and implementation of an OSCE to assess clinical skills in the annual Rheumatology Board certification administered by the CMR. In recent years, the OSCE has been proposed as a more objective tool for assessing clinical skills, especially compared with other modalities such as single clinical cases. However, its limitations are recognized, so it must undergo rigorous scrutiny and pilot testing before it is applied.3

The OSCE was designed by a collegial panel of experts who followed international recommendations for its development.15 These notably include establishing a table of specifications in advance, having the stations validated by a group of experts and running a pilot test. The OSCE was criterion-referenced, since a standard was set in advance, as recommended by the current trend toward competency-based education (www.conacem.org).

The construct validity of the OSCE was analyzed in several ways. First, OSCE performance was correlated with the theory part of the certification exam. Significant correlations between OSCE and theory evaluations, ranging from 0.59 to 0.71, have been described in the literature,16–18 although there is little information in the field of rheumatology.11,12 Kissin et al. recently reported a correlation of 0.52 (P≤0.001) between a 9-station circuit designed to assess musculoskeletal ultrasound skills and its corresponding theory part.12 Conversely, Raj et al. found no significant correlation between theory and practical knowledge when the latter was assessed in medical students with 2 stations on examination of the hand and knee.19 We found a correlation of 0.44 in 2013 and no significant correlation in 2014. This could be related to the candidates' poorer performance on the 2014 theory test and/or to the two assessments (theory and practice) measuring different knowledge. It should be noted that a high correlation between the 2 assessments would mean that applying both instruments adds little information about the applicant's knowledge. A plausible hypothesis is that, when the correlation is high, the OSCE explores (like the theory test) the "knows" and "knows how" levels of Miller's pyramid and neglects the "shows how" level.20 In this regard, Matsell et al.10 identified a key issue for OSCE construct validity when it rests on a high correlation with a written evaluation. From a theoretical point of view, the OSCE assesses a wide variety of skills and clinical competencies. The authors therefore propose categorizing stations into 4 major domains (knowledge, problem solving, clinical skills and patient management) and correlating the stations in each domain with the corresponding theory part. This well-founded proposal will be incorporated in forthcoming applications of the OSCE.

Regarding construct validity, the experience construct was also analyzed: a valid assessment should show that more experienced candidates perform better.21 All applicants had a similar number of years of training. However, the same circuit was applied to 3 newly certified rheumatologists under conditions similar to those of test day. In general, the latter scored higher than the applicants.

Finally, and indirectly, construct validity was analyzed in 2014 by comparing the theory test pass rate (and mean score) between those who passed and those who failed the OSCE. Our results support the validity of the instrument.

With respect to reliability, no formal evaluation was performed. However, 3 strategies have been proposed to increase OSCE reliability, and all of them were implemented: short stations, to allow evaluation of multiple clinical situations; scoring of the stations with predetermined checklists; and circuits built with different stations, evaluators and simulated patients.17 In our case, the patients were real patients (except for 2 actors). They received training booklets designed to represent the condition of each station, and these booklets were reviewed by an expert panel. This increases the reliability of the OSCE without compromising its validity, as can happen when simulated patients or actors are used. In addition, the 9 stations applied in 2 consecutive years showed excellent stability between the 2 groups of applicants. Internal consistency among the certified rheumatologists in the pilot test was also excellent. Finally, the low interstation correlation indicates that each station evaluated different skills or competencies, which argues against redundancy in our instrument. It is important to emphasize the need to include an adequate number of stations so as not to compromise the reliability of the OSCE.3,22 In the only similar exercise we are aware of, the circuit consisted of 12 stations.11

Probably one of the biggest benefits of an OSCE (and of similar evaluations) is the ability to identify deficiencies in residents' training and, eventually, to modify the curriculum. It is therefore essential to have an optimal assessment tool. Although our instrument performed adequately, the OSCE is ultimately an approximation of real problems, not a real physician-patient encounter. Our experience is limited to two years, whereas it is recommended that any instrument be used for 3–5 consecutive years to allow proper calibration. Furthermore, the OSCE was not reproduced in full in consecutive years. With regard to the assessment of the stations, no formal standard-setting method was applied to establish the passing score for each station,23 although some criteria were established beforehand. The OSCE applied to certified rheumatologists (pilot) was held on a different day from the OSCE applied to applicants, although an effort was made to reproduce the conditions of the final evaluation. Implementing the OSCE required financial and human resources. The estimated cost was 120,000 pesos (about $9200). This figure is not excessive, but it is much greater than what is usually invested in the practical assessment of residents and could affect the OSCE's long-term viability.

In conclusion, the OSCE designed by the CMR showed adequate construct validity and reliability, so it can be integrated into the board examination as an instrument for assessing clinical skills for certification as a rheumatologist. Certification boards have a social responsibility, as well as a responsibility to the medical community, to document that specialists are competent to practice. It is therefore necessary to bring rigor, methodological quality and transparency to certification processes. In parallel, society must demand greater professionalism. Society as a whole, the medical community, patients and the competent authorities should share a common goal: to help increase the quality of care our population receives.

Ethical Responsibilities

Protection of people and animals

The authors declare that no experiments on humans or animals were performed for this research.

Data privacy

The authors state that no patient data appear in this article.

Right to privacy and informed consent

The authors state that no patient data appear in this article.

Conflict of Interest

The authors declare no conflict of interest.

Please cite this article as: Pascual Ramos V, Medrano Ramírez G, Solís Vallejo E, Bernard Medina AG, Flores Alvarado DE, Portela Hernández M, et al. Desempeño del examen clínico objetivo estructurado como instrumento de evaluación en la certificación nacional como reumatólogo. Reumatol Clin. 2015;11:215–220.